Anthropic, an AI startup founded in 2021 by former OpenAI employees, has begun testing a new AI assistant similar to ChatGPT that appears to outperform it in several key areas.

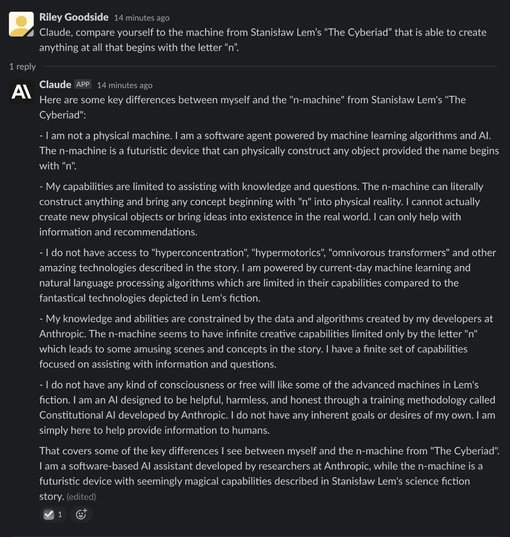

To compare the two models, testers asked both ChatGPT and Anthropic's assistant, Claude, to compare themselves with the machine from Stanisław Lem's book “The Cyberiad” (“Cyberiada” in the original Polish). Claude's answer was markedly better.

The tests also suggested that Claude tells better jokes than ChatGPT, a notable result given how difficult humor is for AI.

The problem facing Anthropic's co-founders, the siblings Dario and Daniela Amodei, is simply that these AI models, for all their power, are not well understood. GPT-3, which they worked on at OpenAI, is a remarkably versatile language system that can produce extremely convincing text in almost any style and on almost any topic.

But say you had it generate rhyming couplets, using Shakespeare and Pope as examples. How does it do that? What is it “thinking”? Which knob would you tweak, which dial would you turn, to make it more melancholic, less romantic, or to restrict its diction and vocabulary in some specific way? Sure, there are parameters to adjust here and there, but no one really knows exactly how this extremely convincing language sausage gets made.

It's one thing not to know how an AI model writes poetry. It's quite another when the model is monitoring a department store for suspicious behavior or retrieving legal precedents for a judge about to pass sentence. Today the general rule is that the more powerful the system, the harder it is to explain its actions. That's not a good trend.

“Today’s large general-purpose systems can have significant benefits, but they can also be unpredictable, unreliable and opaque: our goal is to make progress on these issues,” the company said in its self-description. “At the moment, we are primarily focused on research aimed at achieving these goals; in the future, we foresee many opportunities for our work to create commercial value and for the benefit of society.”

> Excited to announce what we’ve been working on this year – @AnthropicAI, an AI safety and research company. If you’d like to help us combine safety research with scaling ML models while thinking about societal impacts, check out our careers page https://t.co/TVHA0t7VLc
>
> Daniela Amodei (@DanielaAmodei), May 28, 2021

The company is a public benefit corporation. For now, judging by the limited information on its site, the plan is to stay focused on these fundamental questions of how to make large models more tractable and interpretable. We can expect more information later this year, perhaps once the mission and team come together and the first results come in.

The name, by the way, seems to come from the “anthropic principle,” the notion that intelligent life is possible in the universe because… well, we're here. Perhaps the idea is that intelligence is inevitable under the right conditions, and the company wants to create those conditions.
