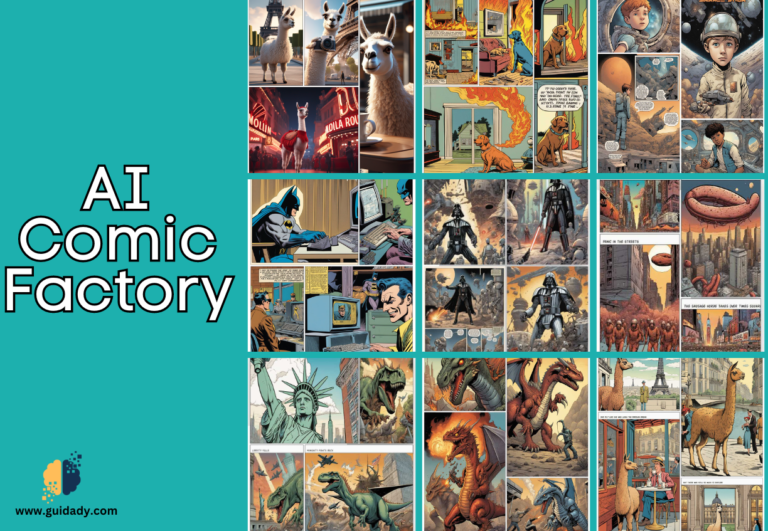

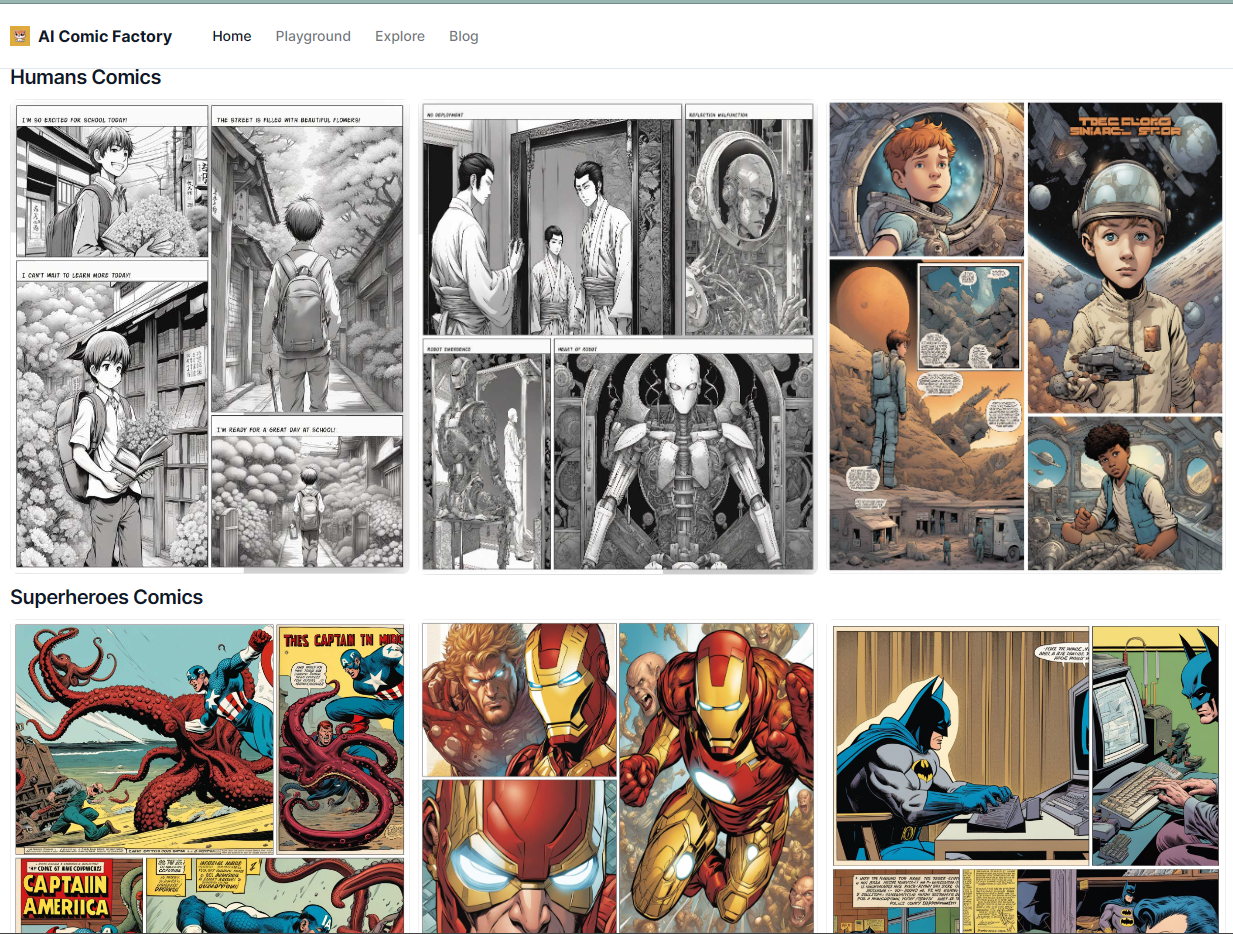

AI Comic Factory

Generate Comic Books with AI

The AI Comic Factory is a project that showcases what current AI models can do. It uses Llama-2 70b, a large language model that can write coherent and varied text, to write the captions for each comic panel. It also uses SDXL 1.0, a Stable Diffusion model that generates realistic, high-quality images, to draw the comic scenes. The project is open source, and anyone can run it locally after some changes to its configuration or code.

First, we want to emphasize that all components of the project are available as open source.

The project cannot be duplicated and run instantly, since it is not a single application. It needs several components to operate, such as the frontend, the backend, the LLM, and SDXL.
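To make the split between these components concrete, here is a minimal Python sketch of the data flow. It assumes a two-stage design in which the LLM step returns a caption and an image prompt for each panel, and the rendering step turns each image prompt into an image. The names `Panel`, `make_comic`, `fake_story` and `fake_render` are hypothetical illustrations, not the project's actual code; the two stand-in stages let the sketch run without any external service.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Panel:
    caption: str        # text written by the LLM for this panel
    image_prompt: str   # prompt sent to the image model (e.g. SDXL); assumed field
    image: bytes = b""  # filled in by the rendering step


def make_comic(
    user_prompt: str,
    generate_story: Callable[[str], List[Panel]],  # hypothetical LLM stage
    render_panel: Callable[[str], bytes],          # hypothetical rendering stage
) -> List[Panel]:
    """Run the two stages in order: write the story, then draw one image per panel."""
    panels = generate_story(user_prompt)
    for panel in panels:
        panel.image = render_panel(panel.image_prompt)
    return panels


# Stand-in stages so the sketch runs offline.
def fake_story(prompt: str) -> List[Panel]:
    return [Panel(caption=f"Panel {i}: {prompt}", image_prompt=f"{prompt}, panel {i}") for i in range(1, 3)]


def fake_render(image_prompt: str) -> bytes:
    return image_prompt.encode("utf-8")  # placeholder "image"


comic = make_comic("a cat detective", fake_story, fake_render)
```

Swapping providers then only means passing a different `generate_story` or `render_panel`, which mirrors how the LLM and rendering engines are configured independently.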

If you want to duplicate the project, open the .env file; you will see that it requires several variables.

Provider config:

- LLM_ENGINE: can be one of "INFERENCE_API", "INFERENCE_ENDPOINT", "OPENAI"
- RENDERING_ENGINE: can be one of "INFERENCE_API", "INFERENCE_ENDPOINT", "REPLICATE", "VIDEOCHAIN" for now, unless you code your custom solution
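As a minimal sketch, assuming both settings are read from environment variables, this is one way to check them before anything else runs. The helper `read_provider_config` is hypothetical; the allowed values are the ones listed above.

```python
import os
from typing import Mapping, Tuple

# Allowed values, as listed in the provider config above.
LLM_ENGINES = {"INFERENCE_API", "INFERENCE_ENDPOINT", "OPENAI"}
RENDERING_ENGINES = {"INFERENCE_API", "INFERENCE_ENDPOINT", "REPLICATE", "VIDEOCHAIN"}


def read_provider_config(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (llm_engine, rendering_engine), raising ValueError on unsupported values."""
    llm = env.get("LLM_ENGINE", "").strip()
    rendering = env.get("RENDERING_ENGINE", "").strip()
    if llm not in LLM_ENGINES:
        raise ValueError(f"Unsupported LLM_ENGINE: {llm!r}")
    if rendering not in RENDERING_ENGINES:
        raise ValueError(f"Unsupported RENDERING_ENGINE: {rendering!r}")
    return llm, rendering
```

Failing early on a typo such as "OPEN_AI" is easier to debug than a provider error halfway through generating a comic.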

Auth config:

- AUTH_HF_API_TOKEN: necessary if you decide to use an Inference API model or a custom Inference Endpoint
- AUTH_OPENAI_API_KEY: only needed if you choose OpenAI for the LLM engine
- AUTH_VIDEOCHAIN_API_TOKEN: secret token to access the VideoChain API server
- AUTH_REPLICATE_API_TOKEN: needed if you want to use Replicate.com
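The following sketch checks that the token matching each chosen engine is present. The engine-to-variable mapping is an assumption based on the list above (in particular, that both Hugging Face options use AUTH_HF_API_TOKEN for the LLM as well as for rendering), and `missing_auth_vars` is a hypothetical helper.

```python
from typing import List, Mapping

# Assumed mapping from each engine to the auth variable it needs.
LLM_AUTH = {
    "INFERENCE_API": "AUTH_HF_API_TOKEN",
    "INFERENCE_ENDPOINT": "AUTH_HF_API_TOKEN",
    "OPENAI": "AUTH_OPENAI_API_KEY",
}
RENDERING_AUTH = {
    "INFERENCE_API": "AUTH_HF_API_TOKEN",
    "INFERENCE_ENDPOINT": "AUTH_HF_API_TOKEN",
    "REPLICATE": "AUTH_REPLICATE_API_TOKEN",
    "VIDEOCHAIN": "AUTH_VIDEOCHAIN_API_TOKEN",
}


def missing_auth_vars(env: Mapping[str, str]) -> List[str]:
    """List the auth variables required by the chosen engines that are unset or empty."""
    needed = {
        LLM_AUTH.get(env.get("LLM_ENGINE", "")),
        RENDERING_AUTH.get(env.get("RENDERING_ENGINE", "")),
    }
    needed.discard(None)  # unknown engine: nothing to check here
    return sorted(name for name in needed if not env.get(name))
```

For example, with LLM_ENGINE="OPENAI", RENDERING_ENGINE="REPLICATE" and only AUTH_REPLICATE_API_TOKEN set, the helper reports that AUTH_OPENAI_API_KEY is missing.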

Rendering config:

- RENDERING_HF_INFERENCE_ENDPOINT_URL: necessary if you decide to use a custom Inference Endpoint (defaults to nothing)
- RENDERING_VIDEOCHAIN_API_URL: URL of the VideoChain API server
- RENDERING_HF_INFERENCE_API_BASE_MODEL: optional, defaults to "stabilityai/stable-diffusion-xl-base-1.0"
- RENDERING_HF_INFERENCE_API_REFINER_MODEL: optional, defaults to "stabilityai/stable-diffusion-xl-refiner-1.0"
- RENDERING_REPLICATE_API_MODEL: optional, defaults to "stabilityai/sdxl"
- RENDERING_REPLICATE_API_MODEL_VERSION: optional, in case you want to change the version
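Because most of these settings are optional, it may help to see how the documented defaults combine with user overrides. `rendering_settings` is a hypothetical helper, and treating an empty value as "unset" is an assumption made here for convenience.

```python
from typing import Dict, Mapping

# Defaults copied from the rendering config list above.
RENDERING_DEFAULTS = {
    "RENDERING_HF_INFERENCE_API_BASE_MODEL": "stabilityai/stable-diffusion-xl-base-1.0",
    "RENDERING_HF_INFERENCE_API_REFINER_MODEL": "stabilityai/stable-diffusion-xl-refiner-1.0",
    "RENDERING_REPLICATE_API_MODEL": "stabilityai/sdxl",
}


def rendering_settings(env: Mapping[str, str]) -> Dict[str, str]:
    """Start from the defaults and apply any non-empty value found in env."""
    settings = dict(RENDERING_DEFAULTS)
    for name in RENDERING_DEFAULTS:
        value = env.get(name, "").strip()
        if value:  # empty strings fall back to the default
            settings[name] = value
    return settings
```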

Language model config:

- LLM_HF_INFERENCE_ENDPOINT_URL: "https://llama-v2-70b-chat.ngrok.io"
- LLM_HF_INFERENCE_API_MODEL: "codellama/CodeLlama-7b-hf"

You can disregard some variables that are for community-sharing purposes. They are not necessary to use the AI Comic Factory on your own site or device (they are for connecting with the Hugging Face community, and only apply to official Hugging Face apps):

- NEXT_PUBLIC_ENABLE_COMMUNITY_SHARING: you don’t need this
- COMMUNITY_API_URL: you don’t need this
- COMMUNITY_API_TOKEN: you don’t need this
- COMMUNITY_API_ID: you don’t need this

For further details, consult the default .env configuration file. You can override any variable locally by creating a .env.local file (this file should not be committed as it may contain sensitive data).
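In the actual app, the framework loads these files itself. The sketch below only illustrates the override order (values in .env.local win over .env). It is a deliberately tiny parser that handles KEY=VALUE lines, full-line "#" comments and surrounding quotes, and it ignores dotenv features such as inline comments, variable expansion and multi-line values. All function names are hypothetical.

```python
from pathlib import Path
from typing import Dict, List


def parse_env_text(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blanks and '#' comments, stripping matching quotes."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]  # drop surrounding quotes
        values[key.strip()] = value
    return values


def merge_layers(*layers: Dict[str, str]) -> Dict[str, str]:
    """Later layers win, so merge_layers(env, env_local) lets .env.local override .env."""
    merged: Dict[str, str] = {}
    for layer in layers:
        merged.update(layer)
    return merged


def load_config(directory: Path) -> Dict[str, str]:
    """Read .env, then .env.local (if present) on top of it."""
    layers: List[Dict[str, str]] = []
    for name in (".env", ".env.local"):
        path = directory / name
        if path.exists():
            layers.append(parse_env_text(path.read_text(encoding="utf-8")))
    return merge_layers(*layers)
```

Keeping secrets only in .env.local, and leaving that file out of version control, is what makes this layering safe.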

Currently, the AI Comic Factory uses Llama-2 70b through an Inference Endpoint.

To configure the LLM, you have four options:

1: Use an Inference API model

This recently added option lets you use one of the models hosted on the Hugging Face Hub. CodeLlama 34b is recommended, as it gives better results than the 7b model.

To activate it, create a .env.local configuration file:

LLM_ENGINE="INFERENCE_API"

AUTH_HF_API_TOKEN="Your Hugging Face token"

# "codellama/CodeLlama-7b-hf" is used by default, but you can change this

# note: you should use a model able to generate JSON responses,

# so it is strongly suggested to use at least the 34b model

LLM_HF_INFERENCE_API_MODEL="codellama/CodeLlama-7b-hf"
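For reference, here is a minimal sketch of the kind of HTTP call this option comes down to, using only the Python standard library. It assumes the classic serverless Inference API URL pattern `https://api-inference.huggingface.co/models/<model>` and its `inputs`/`parameters` text-generation payload; Hugging Face has reorganized its inference URLs over time, so check the current docs before relying on it. `build_inference_api_request` is a hypothetical helper, and the request is only prepared, not sent.

```python
import json
import urllib.request


def build_inference_api_request(model: str, token: str, prompt: str,
                                max_new_tokens: int = 200) -> urllib.request.Request:
    """Prepare (but do not send) a text-generation call to the HF Inference API."""
    url = f"https://api-inference.huggingface.co/models/{model}"  # assumed URL pattern
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_inference_api_request("codellama/CodeLlama-7b-hf", "hf_example", "Write a comic story as JSON")
```

To actually send it, pass `req` to `urllib.request.urlopen` and decode the JSON body. This is also where the note about JSON matters: the app needs to parse the model's text, so a larger model that reliably emits valid JSON saves a lot of retries.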

2: Use an Inference Endpoint URL

To use the AI Comic Factory with your own LLM hosted by the Hugging Face Inference Endpoint service, you need to set up a .env.local configuration file:

LLM_ENGINE="INFERENCE_ENDPOINT"

AUTH_HF_API_TOKEN="Your Hugging Face token"

LLM_HF_INFERENCE_ENDPOINT_URL="URL of your Inference Endpoint"

If you want to run this type of LLM on your own machine, you can install TGI (Text Generation Inference); please refer to this post for the licensing details.
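If you run TGI yourself, the LLM call is a JSON POST to TGI's `/generate` route, which takes `inputs` plus optional `parameters` and answers with a `generated_text` field. The sketch below assumes a hypothetical local server at `http://127.0.0.1:8080`; whether a hosted Inference Endpoint exposes the same route should be verified for your endpoint. Both helpers are hypothetical.

```python
import json
import urllib.request
from typing import Optional


def build_tgi_request(base_url: str, prompt: str, token: Optional[str] = None,
                      max_new_tokens: int = 200) -> urllib.request.Request:
    """Prepare (but do not send) a POST to a TGI server's /generate route."""
    headers = {"Content-Type": "application/json"}
    if token:  # protected endpoints expect a Bearer token; a local server usually does not
        headers["Authorization"] = f"Bearer {token}"
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        base_url.rstrip("/") + "/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def parse_tgi_response(body: bytes) -> str:
    """Extract the completion from a /generate response body."""
    return json.loads(body)["generated_text"]
```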

3: Use an OpenAI API Key

This recently added option lets you use the OpenAI API as the LLM engine, authenticated with your OpenAI API key.

To activate it, create a .env.local configuration file:

LLM_ENGINE="OPENAI"

# default openai api base url is: https://api.openai.com/v1

LLM_OPENAI_API_BASE_URL="Your OpenAI API Base URL"

LLM_OPENAI_API_MODEL="gpt-3.5-turbo"

AUTH_OPENAI_API_KEY="Your OpenAI API Key"
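Under the hood, this option amounts to a request to the Chat Completions endpoint at `<base URL>/chat/completions`, using the base URL, model and key configured above. The sketch below builds such a request with the standard library and sets only the `model` and `messages` fields; `build_openai_request` and `parse_openai_response` are hypothetical helpers.

```python
import json
import urllib.request


def build_openai_request(base_url: str, api_key: str, model: str,
                         prompt: str) -> urllib.request.Request:
    """Prepare (but do not send) a Chat Completions call."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def parse_openai_response(body: bytes) -> str:
    """Return the assistant message text from a Chat Completions response."""
    return json.loads(body)["choices"][0]["message"]["content"]
```

Keeping the base URL configurable is what makes LLM_OPENAI_API_BASE_URL useful: any server that speaks the same protocol can be swapped in.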

4: Fork and modify the code to use a different LLM system

Another option is to replace the LLM integration with a different protocol and/or provider (e.g. Claude, Replicate), or to disable the LLM entirely and use a hand-crafted story instead (by returning mock or static data).
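As an illustration of the last idea, a stand-in for the LLM can simply return a fixed story. This sketch assumes the rest of the app expects a JSON list of panels; the field names `caption` and `instructions` and the function `predict_story_mock` are hypothetical, so adapt them to whatever structure the real code parses.

```python
import json
from typing import Dict, List

# A hand-written story standing in for the LLM output (hypothetical fields).
STATIC_PANELS: List[Dict[str, str]] = [
    {"caption": "Our hero wakes up late.", "instructions": "messy bedroom, morning light"},
    {"caption": "A strange letter arrives.", "instructions": "close-up of an envelope on a doormat"},
    {"caption": "The adventure begins.", "instructions": "hero running down a city street"},
]


def predict_story_mock(prompt: str, nb_panels: int = 3) -> str:
    """Ignore the prompt and return a fixed story, serialized as JSON like an LLM reply."""
    return json.dumps(STATIC_PANELS[:nb_panels])
```

Returning the same JSON text a real model would produce keeps the downstream parsing and rendering code unchanged.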

The rendering API (VideoChain) creates the panel images from text. I developed this API for some of my projects at Hugging Face, and it relies on other existing APIs:

- The hysts/SD-XL Space by @hysts

- Other APIs for making videos, adding audio, etc., which you won’t need for the AI Comic Factory

For the rendering, you have three options:

1: Deploy VideoChain yourself

You will have to clone the VideoChain source code and deploy it yourself.

2: Use Replicate

To use Replicate, you have to create a .env.local configuration file:

RENDERING_ENGINE="REPLICATE"

RENDERING_REPLICATE_API_MODEL="stabilityai/sdxl"

RENDERING_REPLICATE_API_MODEL_VERSION="da77bc59ee60423279fd632efb4795ab731d9e3ca9705ef3341091fb989b7eaf"

AUTH_REPLICATE_API_TOKEN="Your Replicate token"
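To show what these settings are used for, here is a minimal sketch of creating a prediction through Replicate's HTTP API (`POST https://api.replicate.com/v1/predictions` with a `version` and an `input` object), assuming the SDXL model accepts a `prompt` input. Predictions run asynchronously, so the response describes a pending job that you then poll until it finishes; the sketch only prepares the initial request. `build_replicate_request` is a hypothetical helper.

```python
import json
import urllib.request

REPLICATE_PREDICTIONS_URL = "https://api.replicate.com/v1/predictions"


def build_replicate_request(token: str, version: str, prompt: str) -> urllib.request.Request:
    """Prepare (but do not send) a request that starts an SDXL prediction."""
    payload = {"version": version, "input": {"prompt": prompt}}  # "prompt" input assumed
    return urllib.request.Request(
        REPLICATE_PREDICTIONS_URL,
        data=json.dumps(payload).encode("utf-8"),
        # Current Replicate docs show a Bearer token; older examples used "Token <key>".
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_replicate_request(
    "r8_example",
    "da77bc59ee60423279fd632efb4795ab731d9e3ca9705ef3341091fb989b7eaf",
    "comic panel, a cat detective in the rain",
)
```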

3: Use another SDXL API

You can fork the project and modify the code to use the Stable Diffusion setup that suits your needs (local, open source, proprietary, your own HF Space, etc.).
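One way to structure such a fork is a small registry that maps an engine name to a rendering function. This is a minimal sketch, not the project's actual architecture: the `Renderer` signature (prompt in, encoded image bytes out), the registry helpers and the engine name "MY_LOCAL_SD" are all hypothetical, and the placeholder function stands in for a call to your own Stable Diffusion server, HF Space, or another image API.

```python
from typing import Callable, Dict

# Hypothetical signature: take an image prompt, return encoded image bytes.
Renderer = Callable[[str], bytes]

RENDERERS: Dict[str, Renderer] = {}


def register_renderer(name: str) -> Callable[[Renderer], Renderer]:
    """Decorator that makes a rendering function selectable by engine name."""
    def decorator(fn: Renderer) -> Renderer:
        RENDERERS[name] = fn
        return fn
    return decorator


@register_renderer("MY_LOCAL_SD")  # hypothetical engine name
def render_with_local_sd(prompt: str) -> bytes:
    # Placeholder: call your own Stable Diffusion server or other image API here.
    return f"<image for: {prompt}>".encode("utf-8")


def render(prompt: str, engine: str) -> bytes:
    """Dispatch to the renderer registered under the given engine name."""
    try:
        renderer = RENDERERS[engine]
    except KeyError:
        raise ValueError(f"Unknown RENDERING_ENGINE: {engine!r}") from None
    return renderer(prompt)
```

New backends then only need a decorated function, and the rest of the app keeps calling `render` with the configured engine name.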

You can also try something different, such as DALL-E.
