AutoGen

Enable Next-Gen Large Language Model Applications

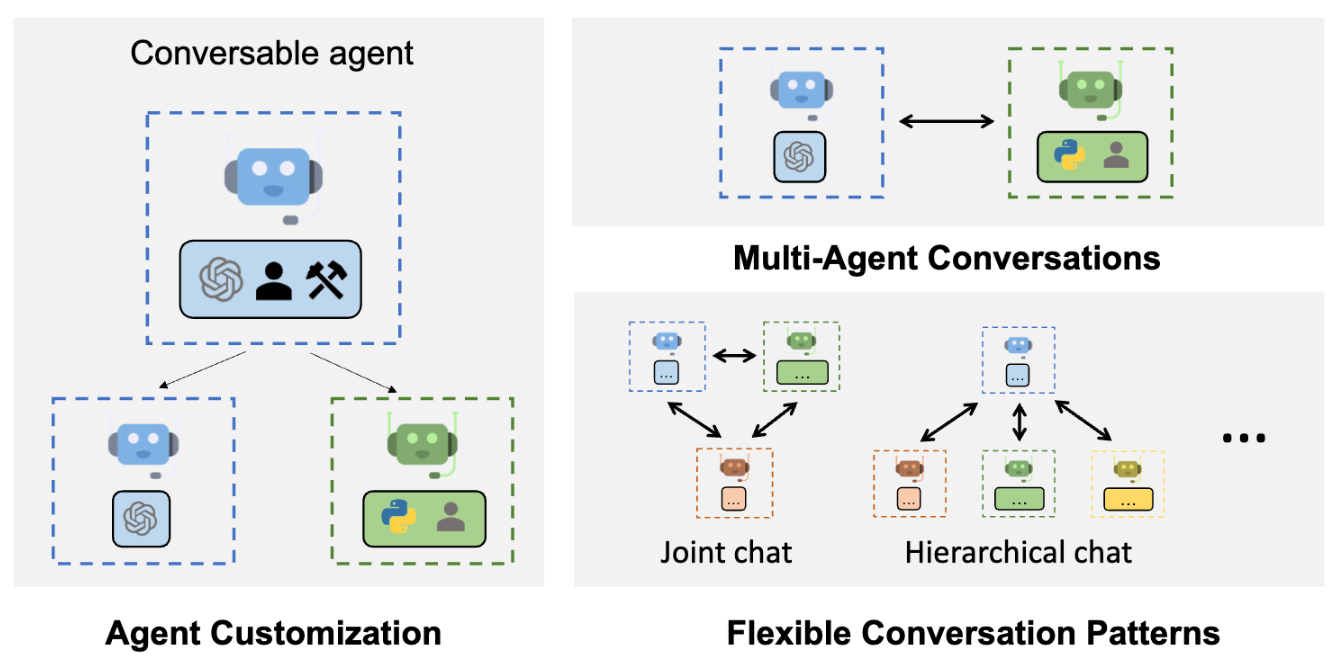

AutoGen is a framework for building LLM applications with multiple agents that converse with each other to solve tasks. AutoGen agents are customizable and conversable, and they integrate human participation smoothly. They can operate in different modes that combine LLMs, human inputs, and tools.

AutoGen requires Python 3.8 or higher. Install it with pip:

```bash
pip install pyautogen
```

This installs only the basic dependencies. Optional features require extra options at install time; see Installation for the available options.

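We highly recommend using Docker, through the Python docker package, for code execution, so that LLM-generated code runs in an isolated container rather than directly on your machine.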

For LLM inference settings, refer to the FAQs.
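LLM inference settings are passed to agents as a list of endpoint configurations, each a dictionary such as `{'model': 'gpt-4', 'api_key': ...}`. The sketch below is a simplified, hypothetical loader (`load_config_list` and `filter_by_model` are illustrative names, not AutoGen functions) that reads such a list from an environment variable holding JSON, or otherwise from a JSON file with the same name. It assumes the JSON is a list of dicts; AutoGen's own loader may differ in its details.

```python
import json
import os
from pathlib import Path
from typing import Dict, List, Set

# Hypothetical helpers for illustration; not part of the AutoGen API.


def load_config_list(env_or_file: str, file_location: str = ".") -> List[Dict]:
    """Read a JSON list of endpoint configs from an env var, else from a file."""
    raw = os.environ.get(env_or_file)
    if raw is None:
        # No env var with that name: treat the name as a JSON file in file_location.
        raw = Path(file_location, env_or_file).read_text()
    config_list = json.loads(raw)
    if not isinstance(config_list, list):
        raise ValueError("expected a JSON list of endpoint configs")
    return config_list


def filter_by_model(config_list: List[Dict], models: Set[str]) -> List[Dict]:
    """Keep only the endpoints whose 'model' value is in `models`."""
    return [cfg for cfg in config_list if cfg.get("model") in models]
```

For example, with `OAI_CONFIG_LIST` set to a JSON list of endpoints, `filter_by_model(load_config_list("OAI_CONFIG_LIST"), {"gpt-4"})` keeps only the GPT-4 entries.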

```python
from autogen import AssistantAgent, UserProxyAgent, config_list_from_json

# Load LLM inference endpoints from an env variable or a file
# See https://microsoft.github.io/autogen/docs/FAQ#set-your-api-endpoints
# and OAI_CONFIG_LIST_sample
config_list = config_list_from_json(env_or_file="OAI_CONFIG_LIST")
# You can also set config_list directly as a list, for example:
# config_list = [{'model': 'gpt-4', 'api_key': '<your OpenAI API key here>'},]

assistant = AssistantAgent("assistant", llm_config={"config_list": config_list})
user_proxy = UserProxyAgent("user_proxy", code_execution_config={"work_dir": "coding"})

# This initiates an automated chat between the two agents to solve the task
user_proxy.initiate_chat(assistant, message="Plot a chart of NVDA and TESLA stock price change YTD.")
```
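In an exchange like the one above, the assistant is expected to answer with code in fenced blocks, which the user proxy then executes in its `work_dir` ("coding" here). The sketch below illustrates only the extraction step, under simplified assumptions: `extract_code_blocks` and `save_code_blocks` are hypothetical names, the regular expression handles only simple fences, and nothing is executed. Running the saved files is best left to an isolated environment such as Docker.

```python
import re
from pathlib import Path
from typing import List, Tuple

# Hypothetical helpers for illustration; not part of the AutoGen API.
FENCE = "`" * 3  # built at runtime so this example nests cleanly in Markdown

# Opening fence with an optional language tag, then a lazily matched body.
CODE_BLOCK_RE = re.compile(FENCE + r"[ \t]*(\w*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(message: str) -> List[Tuple[str, str]]:
    """Return (language, code) pairs for each fenced block in an agent message."""
    return [(lang or "unknown", body) for lang, body in CODE_BLOCK_RE.findall(message)]


def save_code_blocks(message: str, work_dir: str) -> List[Path]:
    """Write each Python block to work_dir as a .py file; nothing is executed here."""
    out_dir = Path(work_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for i, (lang, code) in enumerate(extract_code_blocks(message)):
        if lang != "python":
            continue  # this sketch only saves Python blocks
        path = out_dir / f"block_{i}.py"
        path.write_text(code)
        saved.append(path)
    return saved
```

Keeping extraction separate from execution makes it easy to swap the runner, for example from a local interpreter to a Docker container, without touching the parsing logic.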

Multi-Agent Conversation Framework

AutoGen is a generic framework for building next-gen LLM applications based on multi-agent conversations. It lets users create and customize conversable agents that integrate LLMs, tools, and humans. By enabling chat among multiple intelligent agents, AutoGen supports tasks that require collective problem-solving, automation, or human guidance, including tasks that use tools via code.
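The conversation pattern described above can be illustrated without any LLM. In the sketch below, `ToyAgent` and `run_chat` are hypothetical names, each agent's reply function is a plain Python stub standing in for an LLM, a human, or a tool, and ending the chat on the keyword "TERMINATE" is a convention assumed for the sketch. It models turn-taking only and is not AutoGen's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical toy model of a two-agent chat; not the AutoGen API.
Message = Tuple[str, str]  # (sender name, content)


@dataclass
class ToyAgent:
    name: str
    # Maps the conversation so far to the next message; None means "stay silent".
    reply_fn: Callable[[List[Message]], Optional[str]]


def run_chat(initiator: ToyAgent, recipient: ToyAgent, message: str,
             max_turns: int = 10) -> List[Message]:
    """Alternate replies until an agent is silent, says TERMINATE, or max_turns is reached."""
    history: List[Message] = [(initiator.name, message)]
    speakers = (recipient, initiator)  # the recipient answers the opening message first
    for turn in range(max_turns):
        speaker = speakers[turn % 2]
        reply = speaker.reply_fn(history)
        if reply is None:
            break
        history.append((speaker.name, reply))
        if "TERMINATE" in reply:
            break
    return history


# Stub agents: the assistant proposes an answer, the user checks it.
assistant = ToyAgent("assistant", lambda h: "6 * 7 = 42")
user = ToyAgent("user", lambda h: "Correct. TERMINATE" if "42" in h[-1][1] else "Try again.")

for sender, text in run_chat(user, assistant, "What is 6 * 7?"):
    print(f"{sender}: {text}")
```

Swapping a stub for a function that calls an LLM, asks a person via `input()`, or runs a tool changes an agent's behavior without changing the chat loop, which mirrors the idea of agents that combine LLMs, tools, and humans.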

Key features of the framework include:

✅ Multi-agent conversations

AutoGen lets multiple agents communicate with one another to accomplish tasks, supporting more advanced and diverse applications than a single LLM can handle on its own.

✅ Customization

AutoGen lets users tailor agents to the needs of a specific application, including the choice of LLMs, human input modes, and tools.

✅ Human participation

AutoGen integrates human involvement smoothly: humans can interact with the agents and give them feedback as needed.
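Human input modes can be pictured as a policy that decides, for each incoming message, whether to prompt a person before replying. The sketch below assumes three mode names, "ALWAYS", "TERMINATE", and "NEVER", intended to mirror the values of AutoGen's `human_input_mode` setting, but the decision logic is a simplified illustration rather than AutoGen's exact behavior; `should_ask_human` and `reply` are hypothetical helpers.

```python
from typing import Callable

# Hypothetical policy sketch; the mode names are assumed, the logic is simplified.
VALID_MODES = ("ALWAYS", "TERMINATE", "NEVER")


def should_ask_human(mode: str, message: str, auto_replies: int, max_auto_replies: int) -> bool:
    """Decide whether to prompt a human before replying to `message`."""
    if mode not in VALID_MODES:
        raise ValueError(f"unknown human input mode: {mode}")
    if mode == "ALWAYS":
        return True
    if mode == "NEVER":
        return False
    # TERMINATE: only ask when the chat is about to end.
    return "TERMINATE" in message or auto_replies >= max_auto_replies


def reply(mode: str, message: str, auto_replies: int, max_auto_replies: int,
          auto_reply: Callable[[str], str],
          ask_human: Callable[[str], str] = input) -> str:
    """Let non-empty human input override the automatic reply when the policy allows it."""
    if should_ask_human(mode, message, auto_replies, max_auto_replies):
        human_text = ask_human(f"Reply to {message!r} (Enter to auto-reply): ")
        if human_text.strip():
            return human_text
    return auto_reply(message)
```

Injecting `ask_human` (defaulting to the built-in `input`) keeps the policy testable and lets the same agent run fully automated or with a person in the loop.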
