Brain2Music

Reconstructing Music from Human Brain Activity

Brain2Music is a method for reconstructing music from brain activity. It uses fMRI scans to measure how the brain responds to music stimuli that vary in genre, instrumentation, and mood. It then either retrieves existing music or uses MusicLM, a music generation model, to create new music from the recorded brain activity patterns. The generated music resembles the original stimuli in semantic properties such as genre, instrumentation, and mood. Brain2Music also explores how different brain regions relate to different components of MusicLM, and it identifies which brain regions encode information derived from textual descriptions of the music stimuli.

The Brain2Music method consists of two main steps. First, a regression model maps fMRI responses to the 128-dimensional embedding space of MuLan, a joint text and music embedding model. Second, the Google Research team uses MusicLM, a transformer-based music generation model, to generate music conditioned on the predicted embedding. Alternatively, instead of generating music from scratch, they retrieve the clip from a large music database whose embedding best matches the prediction.
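The decoding and retrieval steps can be illustrated with a minimal sketch. It assumes a ridge regression from voxel responses to 128-dimensional embeddings and a cosine-similarity lookup; the synthetic data, the helper names `fit_ridge` and `retrieve`, and the regularization strength are illustrative assumptions, not the authors' code. The MusicLM generation step is not shown.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def retrieve(pred, candidates, k=1):
    """Return indices of the k candidates most cosine-similar to each prediction."""
    pred_n = pred / np.linalg.norm(pred, axis=1, keepdims=True)
    cand_n = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    sims = pred_n @ cand_n.T
    return np.argsort(-sims, axis=1)[:, :k]

# Synthetic stand-ins (assumption): real inputs would be fMRI responses
# and the music embeddings of the clips the subject listened to.
rng = np.random.default_rng(0)
n_train, n_voxels, emb_dim = 200, 50, 128
true_W = rng.normal(size=(n_voxels, emb_dim))
fmri_train = rng.normal(size=(n_train, n_voxels))
emb_train = fmri_train @ true_W + 0.01 * rng.normal(size=(n_train, emb_dim))

# Step 1: learn a mapping from brain responses to the embedding space.
W = fit_ridge(fmri_train, emb_train, alpha=1.0)

# Step 2 (retrieval variant): predict embeddings for held-out scans and
# look up the nearest clip in a candidate library.
fmri_test = rng.normal(size=(5, n_voxels))
emb_test = fmri_test @ true_W
pred = fmri_test @ W
library = np.vstack([emb_test, rng.normal(size=(100, emb_dim))])
top1 = retrieve(pred, library, k=1)[:, 0]
print(top1)
```

In the generation variant, the predicted embedding would be passed to the music generation model as conditioning instead of being used as a search query.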

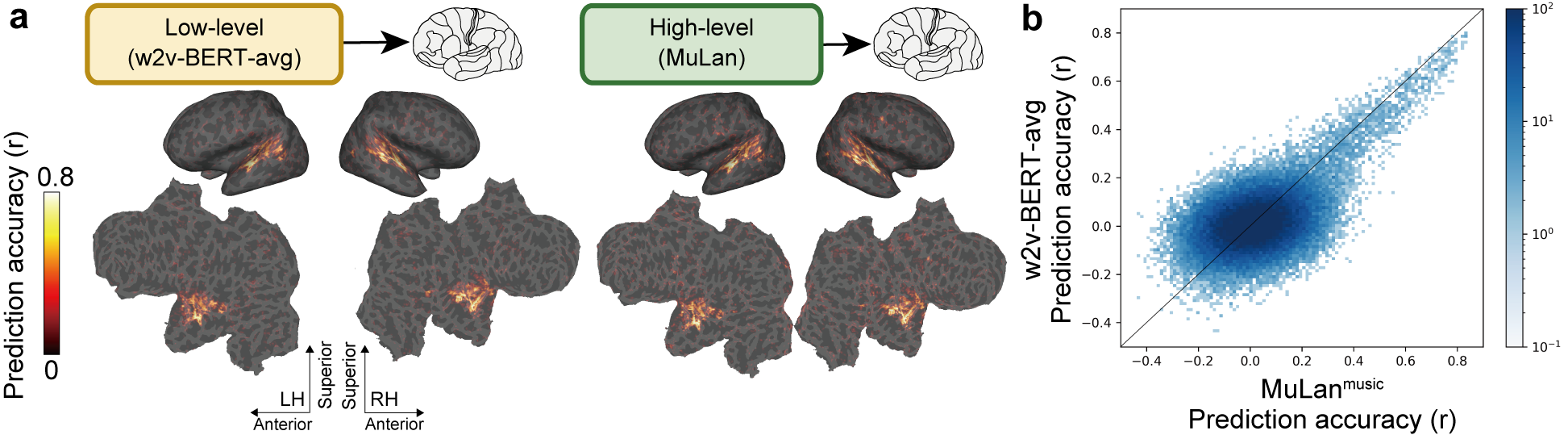

Encoding: Whole-brain Voxel-wise Modeling

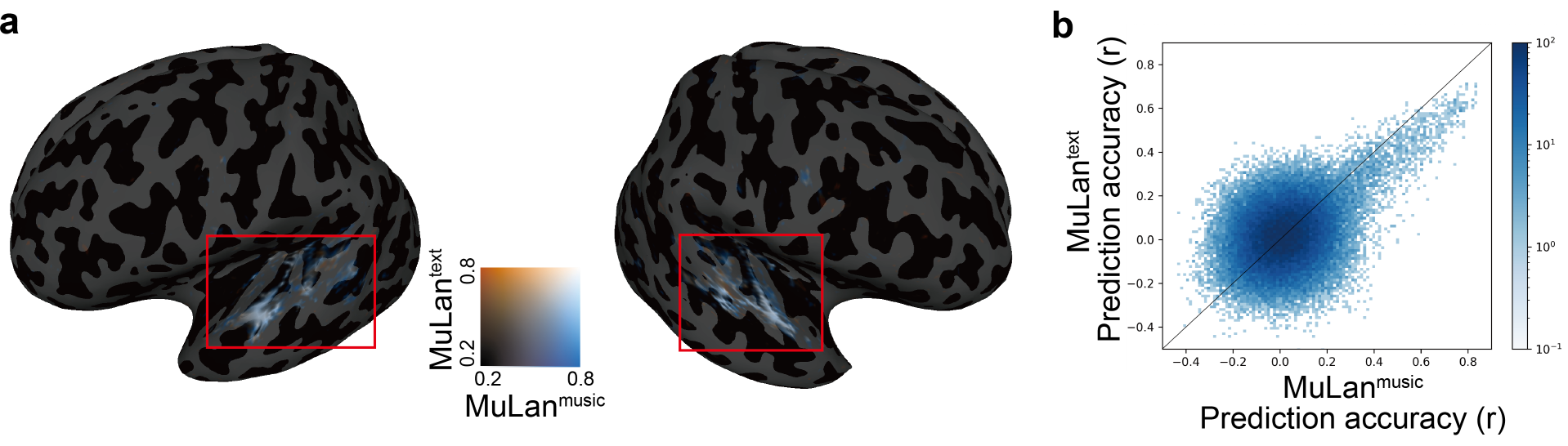

A brain encoding model is used to examine how two components of MusicLM (MuLan and w2v-BERT) relate to human brain activity in the auditory cortex.
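Voxel-wise encoding runs in the opposite direction from decoding: model features predict each voxel's response, and the held-out prediction accuracy per voxel indicates where in the brain those features are represented. The following is a minimal sketch under stated assumptions: ridge regression as the encoding model, Pearson correlation as the score, and synthetic data in which only the first ten voxels depend on the features. The names `fit_ridge` and `voxelwise_correlation` are hypothetical helpers.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def voxelwise_correlation(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom

# Synthetic stand-ins (assumption): features would be embeddings of the
# stimuli, responses would be the measured fMRI signal per voxel.
rng = np.random.default_rng(1)
n_train, n_test, emb_dim, n_voxels = 300, 60, 128, 20
n_responsive = 10  # only these voxels carry feature-related signal

feat_train = rng.normal(size=(n_train, emb_dim))
feat_test = rng.normal(size=(n_test, emb_dim))
true_W = np.zeros((emb_dim, n_voxels))
true_W[:, :n_responsive] = rng.normal(size=(emb_dim, n_responsive))
resp_train = feat_train @ true_W + rng.normal(size=(n_train, n_voxels))
resp_test = feat_test @ true_W + rng.normal(size=(n_test, n_voxels))

# Fit one linear model per voxel (all voxels are solved at once).
W = fit_ridge(feat_train, resp_train, alpha=10.0)

# Score each voxel on held-out data; high scores mark voxels whose
# activity is predictable from the features.
scores = voxelwise_correlation(resp_test, feat_test @ W)
print(np.round(scores, 2))
```

Repeating this with features from different model components and comparing the per-voxel scores is one way to ask which component better explains activity in a given region.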

The study shows that text-derived and music-derived information are represented in partly overlapping brain regions.
